Introduction to 3D Rendering

This note records my experience developing my first game engine (NewbieGameEngine) in my spare time. The engine is far from complete, but I can’t resist sharing some interesting things with you.

A little bit of story

Before this experience, I kept trying to understand how a game engine is coded, but all I found was how to use specific rendering APIs (OpenGL, DirectX, etc.) or how to work with existing game engines (Unity, Unreal, Godot, etc.).

Then one day, I found a great blog that explains how to code a game engine. That blog took me on a beautiful journey of C++ coding, and I can hardly believe that I actually built my small prototype, NewbieGameEngine.

Below I record what I learned during this period. I hope it shortens the time others need to understand how a game engine is coded, which is exactly what I wondered about in the past.

Overview

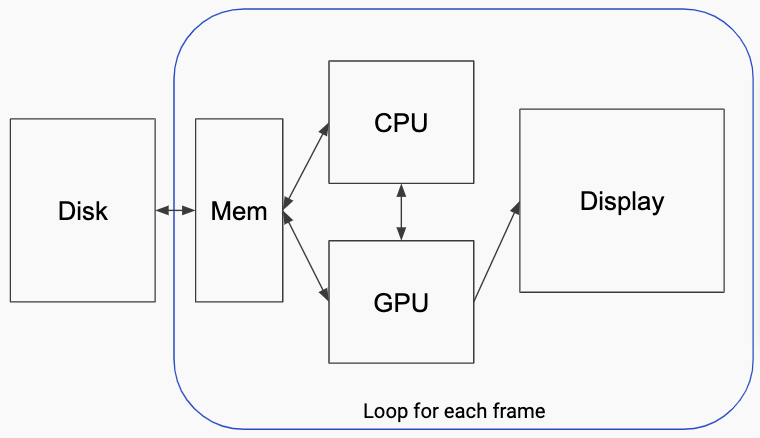

First, let us see how the hardware components work together to render a 3D space on a display. I drew this simple diagram to give the big picture of how data flows to the display.

In brief, models and their assets are loaded into memory. The CPU then processes the loaded data and handles user input to prepare everything the GPU needs (vertices, textures, transformation matrices, etc.) as buffers. Finally, the GPU reads the buffers and draws them to the display according to the instructions issued through the rendering API.
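To make the buffer-preparation step concrete, here is a minimal C++ sketch of how the CPU side might pack vertex data into one contiguous block of bytes before handing it to a rendering API. The `Vertex` layout and the `PackVertexBuffer` name are illustrative assumptions, not taken from NewbieGameEngine.

```cpp
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical vertex layout (an assumption for illustration):
// position, normal and texture coordinate stored as plain floats.
struct Vertex {
    float position[3];
    float normal[3];
    float uv[2];
};

// Copies the vertices into a contiguous byte buffer, which is the form
// that a GPU buffer upload typically expects.
std::vector<std::uint8_t> PackVertexBuffer(const std::vector<Vertex>& vertices) {
    std::vector<std::uint8_t> bytes(vertices.size() * sizeof(Vertex));
    if (!vertices.empty()) {
        std::memcpy(bytes.data(), vertices.data(), bytes.size());
    }
    return bytes;
}
```

The returned bytes are what would be passed to the buffer-creation call of the rendering API in use. That call is left out here because it differs between APIs.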

Rendering techniques

Rasterization pipeline

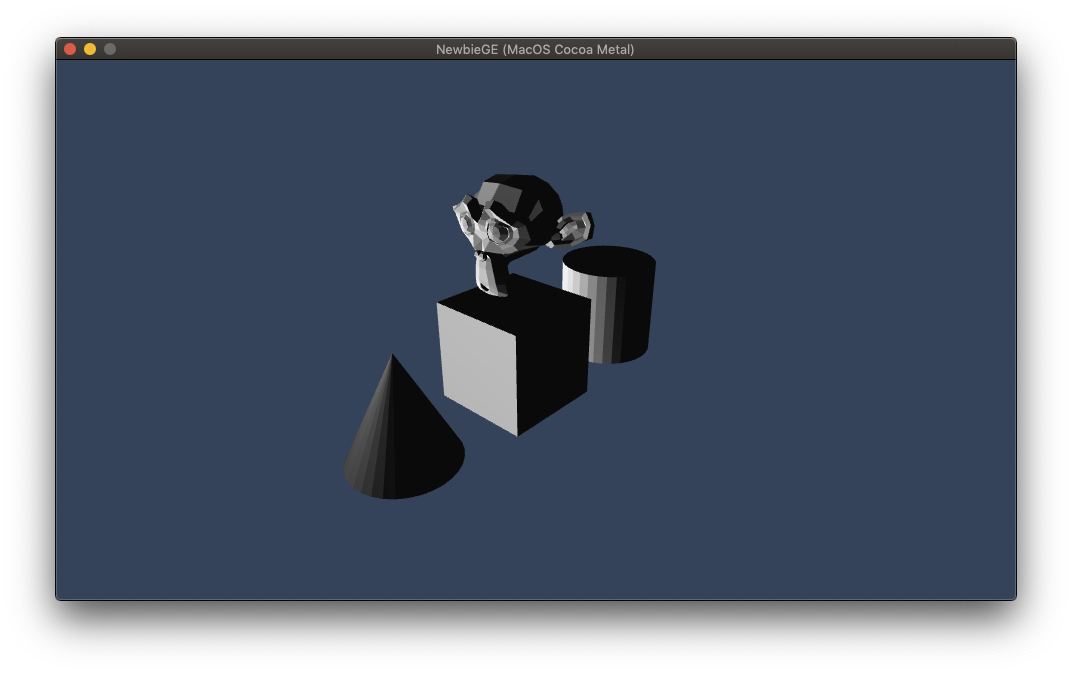

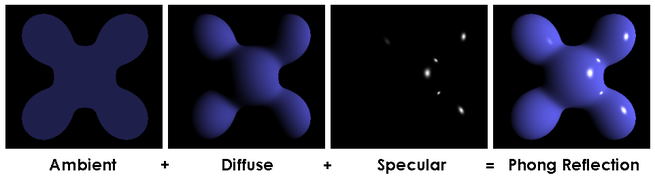

The most common rendering technique implemented by GPUs is rasterization. A basic rasterization result is shown below (Phong model):

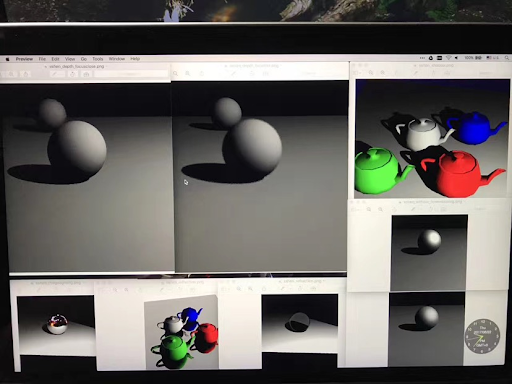

Ray tracing

Another technique is ray tracing, which traces each light ray along its path. This involves a lot of branching (if-else decisions) during rendering, which is difficult for a GPU to execute efficiently because GPUs are designed mainly for SIMD parallelism. Below is a naive ray tracing result rendered on the CPU only. (I first encountered ray tracing in a university lecture called “Realistic Image Synthesis” during my Master's course.)

With ray tracing, effects such as focal length changes, shadows, reflection, and refraction can be implemented easily and in a uniform way, whereas they require tricky techniques in the rasterization pipeline.
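The branching mentioned above shows up even in the smallest building block of a ray tracer. As a rough sketch (not the code used for my lecture work), here is a ray-sphere intersection test in C++. The `Vec3` type and the `IntersectSphere` name are assumptions made for this example, and the ray direction is assumed to be normalized.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Finds the nearest intersection of a ray with a sphere.
// `direction` must have unit length. On a hit in front of the origin,
// writes the distance along the ray to `t_hit` and returns true.
bool IntersectSphere(Vec3 origin, Vec3 direction, Vec3 center, float radius,
                     float& t_hit) {
    const Vec3 oc = origin - center;
    const float b = Dot(oc, direction);
    const float c = Dot(oc, oc) - radius * radius;
    const float discriminant = b * b - c;
    if (discriminant < 0.0f) {
        return false;  // the ray misses the sphere
    }
    const float root = std::sqrt(discriminant);
    float t = -b - root;  // nearer of the two solutions
    if (t < 0.0f) {
        t = -b + root;    // origin is inside the sphere, take the exit point
    }
    if (t < 0.0f) {
        return false;     // the sphere is entirely behind the ray
    }
    t_hit = t;
    return true;
}
```

Every ray can take a different path through these branches, which is the kind of divergence that SIMD-style hardware handles poorly.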

Workflow of rendering engine

The main workflow of a rendering engine is simple:

- Create a window that handles user input

- Parse scene data from disk and build data structures in memory

- Format the data and write it into buffers

- Upload the buffers to the GPU for drawing

  - Prepare the rendering pipeline for the rendering API

  - Decide on the rendering model (shader programs)

  - Compute object position and projection to the camera (basic math)

Here I will only explain the fourth step and its three sub-steps. For the other three steps, please refer directly to my code at the article_34 tag, because they vary with the code design.

1. Rendering pipeline

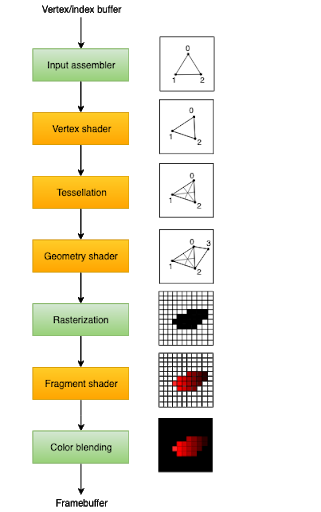

The rasterization rendering pipeline works as follows (image from Khronos):

The green parts are steps that the GPU pipeline performs automatically for you. The most used (and required) stages are the vertex shader, which transforms each vertex, and the fragment shader, which computes the color of each target screen pixel.

Tessellation is a stage that subdivides a triangle or a quadrilateral into more triangles. It is very useful for creating terrain with different levels of detail (LOD).

The geometry shader is a stage that can create new vertices in addition to the input vertices. It is very useful if you want to visualize object normal vectors. Here is a good article about geometry shaders

The rendering pipeline is implemented by rendering APIs such as OpenGL, Vulkan, Metal, DirectX, and WebGL.
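To give a feel for what "more triangles inside a triangle" means, here is a CPU-side C++ sketch that splits each triangle into four by connecting its edge midpoints. This is only an illustration of the idea: hardware tessellation is driven by tessellation levels and shader stages rather than by a function like this, and the `Point3`, `Triangle` and `Subdivide` names are assumptions.

```cpp
#include <array>
#include <vector>

struct Point3 { float x, y, z; };
using Triangle = std::array<Point3, 3>;

inline Point3 Midpoint(const Point3& a, const Point3& b) {
    return {(a.x + b.x) * 0.5f, (a.y + b.y) * 0.5f, (a.z + b.z) * 0.5f};
}

// Splits every input triangle into four smaller ones by connecting the
// midpoints of its edges. The winding order of the input is preserved.
std::vector<Triangle> Subdivide(const std::vector<Triangle>& input) {
    std::vector<Triangle> output;
    output.reserve(input.size() * 4);
    for (const Triangle& t : input) {
        const Point3 ab = Midpoint(t[0], t[1]);
        const Point3 bc = Midpoint(t[1], t[2]);
        const Point3 ca = Midpoint(t[2], t[0]);
        output.push_back(Triangle{{t[0], ab, ca}});
        output.push_back(Triangle{{ab, t[1], bc}});
        output.push_back(Triangle{{ca, bc, t[2]}});
        output.push_back(Triangle{{ab, bc, ca}});  // center triangle
    }
    return output;
}
```

Calling `Subdivide` repeatedly on terrain patches close to the camera, and less often on distant ones, is one simple way to picture how different levels of detail come about.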

2. Basic Math

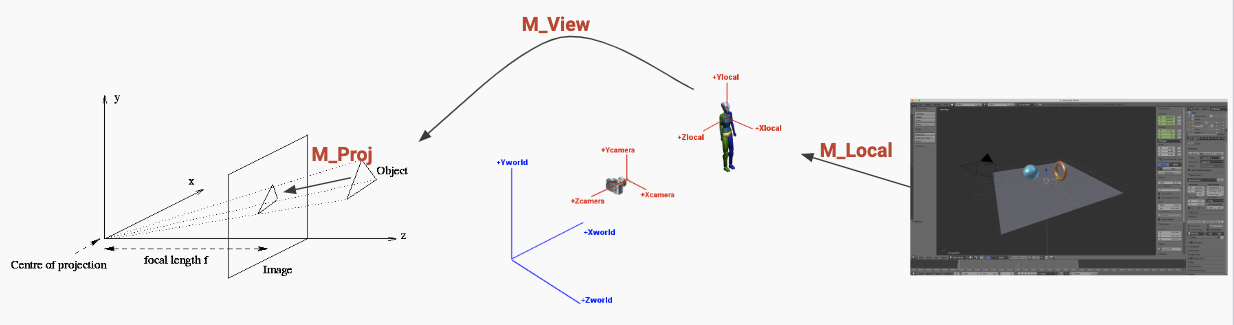

The basic math is how to project an object onto the camera screen. There are three main steps in the projection:

- M_Local: transforms the object from local space to world space.

- M_View: transforms the object from world space into camera coordinates.

- M_Proj: projects the 3D object onto the camera screen.

We could implement the basic math in a vertex shader:

struct PerFrameConstants
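As a CPU-side illustration of the three transforms listed above, the following C++ sketch chains M_Local, M_View and M_Proj and applies them to one vertex. It is not the engine's shader code. The helper names are assumptions, the matrices are stored row-major and multiply column vectors, and the projection assumes a right-handed camera looking down the negative z axis with a depth range of 0 to 1.

```cpp
#include <array>
#include <cmath>

using Mat4 = std::array<std::array<float, 4>, 4>;  // m[row][column]
struct Vec4 { float x, y, z, w; };

Mat4 Identity() {
    Mat4 m{};  // all zeros
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

Mat4 Multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

Vec4 Transform(const Mat4& m, const Vec4& v) {
    const float in[4] = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c) out[r] += m[r][c] * in[c];
    return {out[0], out[1], out[2], out[3]};
}

Mat4 Translation(float tx, float ty, float tz) {
    Mat4 m = Identity();
    m[0][3] = tx;
    m[1][3] = ty;
    m[2][3] = tz;
    return m;
}

// Perspective projection: right-handed view space, depth mapped to [0, 1].
Mat4 Perspective(float fov_y_radians, float aspect, float z_near, float z_far) {
    const float f = 1.0f / std::tan(fov_y_radians * 0.5f);
    Mat4 m{};
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = z_far / (z_near - z_far);
    m[2][3] = z_near * z_far / (z_near - z_far);
    m[3][2] = -1.0f;  // copies -z into w for the perspective divide
    return m;
}

// clip = M_Proj * M_View * M_Local * local_position
Vec4 ProjectToClipSpace(const Mat4& m_local, const Mat4& m_view,
                        const Mat4& m_proj, const Vec4& local_position) {
    const Mat4 mvp = Multiply(m_proj, Multiply(m_view, m_local));
    return Transform(mvp, local_position);
}
```

Dividing the returned `x`, `y` and `z` by `w` gives normalized device coordinates. The GPU performs that division automatically after the vertex shader.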

3. Rendering model

The rendering model describes how an object looks under given lighting conditions. Models range from the basic Phong model to the more advanced physically based rendering (PBR) model.

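Here we will introduce the Phong model (empirical rather than physically based) as an example. It contains three parts:

- Ambient: a uniform color for every pixel of the object.

- Diffuse: diffuse light reflection, which depends on the angle between the surface normal and the light direction.

- Specular: specular light reflection, which produces the highlight seen near the mirror direction of the light.

We could implement the Phong model in a fragment shader (in Metal Shading Language):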

fragment float4 basic_frag_main(basic_vert_main_out in [[stage_in]], constant PerFrameConstants& pfc [[buffer(10)]])
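For readers who want to see the three Phong terms spelled out, here is a plain C++ sketch that shades one surface point with one light. It is a CPU-side illustration rather than the shader above, and the `PhongMaterial` struct, the `ShadePhong` name and the parameter layout are assumptions. All input vectors are assumed to be non-zero.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline Vec3 Mul(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }
inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 Normalize(Vec3 v) { return v * (1.0f / std::sqrt(Dot(v, v))); }

// Hypothetical material description for this example.
struct PhongMaterial {
    Vec3 ambient;
    Vec3 diffuse;
    Vec3 specular;
    float shininess;
};

// Returns ambient + diffuse + specular for a single light.
// `to_light` and `to_viewer` point away from the surface.
Vec3 ShadePhong(const PhongMaterial& material, Vec3 normal, Vec3 to_light,
                Vec3 to_viewer, Vec3 light_color) {
    const Vec3 n = Normalize(normal);
    const Vec3 l = Normalize(to_light);
    const Vec3 v = Normalize(to_viewer);

    // Diffuse: proportional to the cosine between normal and light direction.
    const float n_dot_l = std::max(Dot(n, l), 0.0f);

    // Specular: reflect the light direction about the normal, r = 2(n.l)n - l,
    // and compare it with the view direction.
    const Vec3 r = n * (2.0f * Dot(n, l)) - l;
    float spec = 0.0f;
    if (n_dot_l > 0.0f) {
        spec = std::pow(std::max(Dot(r, v), 0.0f), material.shininess);
    }

    const Vec3 lit = material.diffuse * n_dot_l + material.specular * spec;
    return material.ambient + Mul(lit, light_color);
}
```

A surface facing away from the light receives only the ambient term, which is why the unlit side of a Phong-shaded object looks flat.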

Run the code

You can run the code as follows:

- Clone the code at the article_34 tag

- Open the repo folder in VSCode

- Press “CMD+Shift+P”

- Select “Run Task” and run “Cmake Test Release”

- Select “Run Task” again and run “build Xcode project”

- Open the generated Xcode project and run the “Editor” target

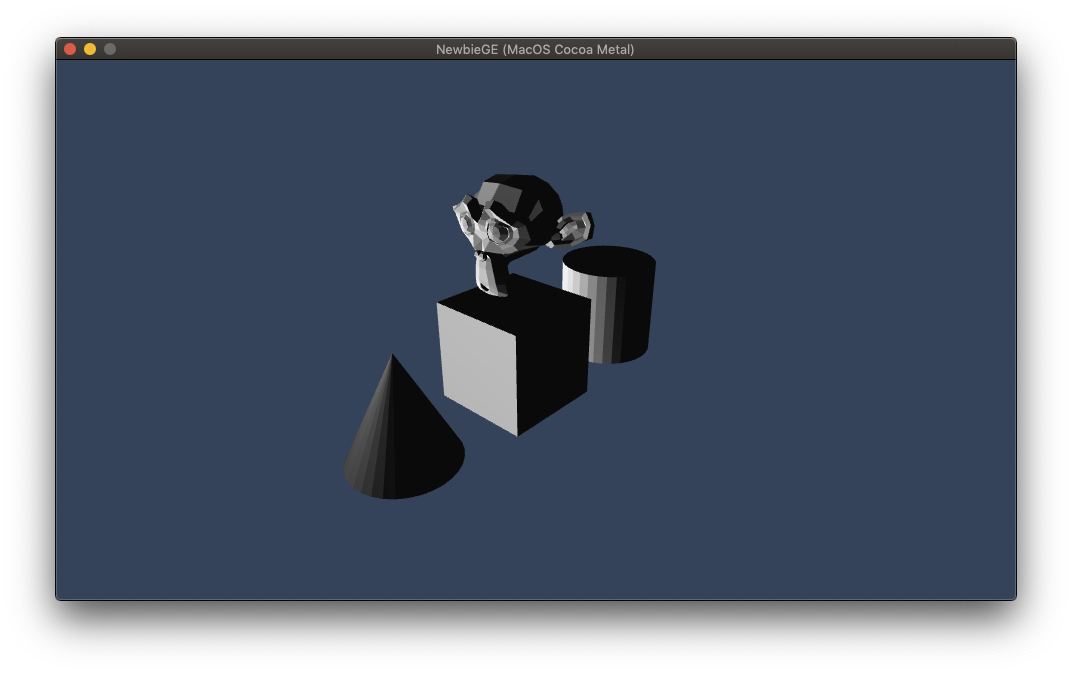

You should get the following window if nothing fails:

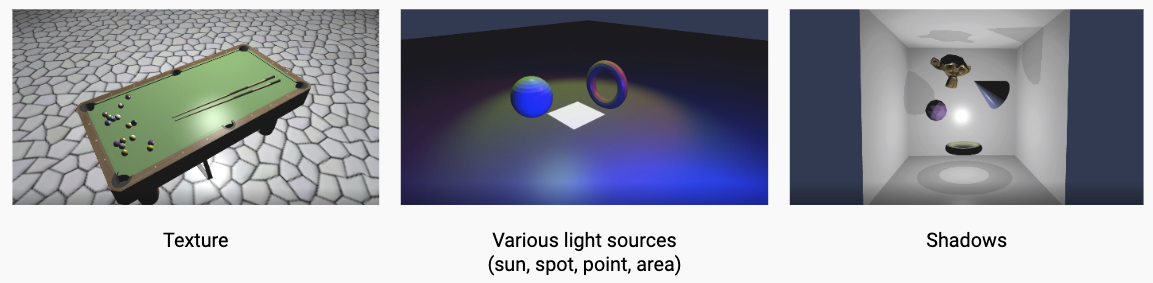

Extensions

Apart from basic rendering, we could add more effects to our rendering engine, such as textures and shadows.

These extensions and the PBR model will probably be introduced later.